What is data management? - Practical Guide

1. What is data management?

How is data management defined?

Data management is the end-to-end process of collecting, processing, storing, sharing and using data across an organization and its ecosystem. Data management processes need to be secure, efficient, and compliant with regulations in order to be effective and to add value to the organization. They must scale data consumption by both humans and AI in order to increase business value.

As data becomes central to organizational success and data volumes and complexity increase, data management has become a vital discipline for both the public and private sectors.

An enterprise data management plan has to cover:

- The creation and collection of data across the organization

- How and where data is stored, both on-premises and in the cloud

- Processes to keep data available for use

- Data privacy, security and backup

- Compliance requirements, especially around regulations, retention and protecting customer information

- Data integration, processing, enrichment and transformation

- The creation of ready-to-use, high value data products to scale consumption

- Data sharing and delivery to humans and AI, both internally and externally through data product marketplaces

- Monitoring performance and usage, constantly driving improvements

Why data management is the foundation for a successful data product marketplace

Effective data management is vital to both governing data and ensuring it can be shared easily across the business, with partners and publicly through data product marketplaces. Data management processes collect, store, categorize, enrich and prepare data, enabling the creation of high-value, readily consumable data products. These, along with other data assets, can then be shared through intuitive, self-service data product marketplaces which scale data consumption by making relevant information readily available in the right formats to all users, irrespective of their role or level of technical skills.

What data do organizations have to manage?

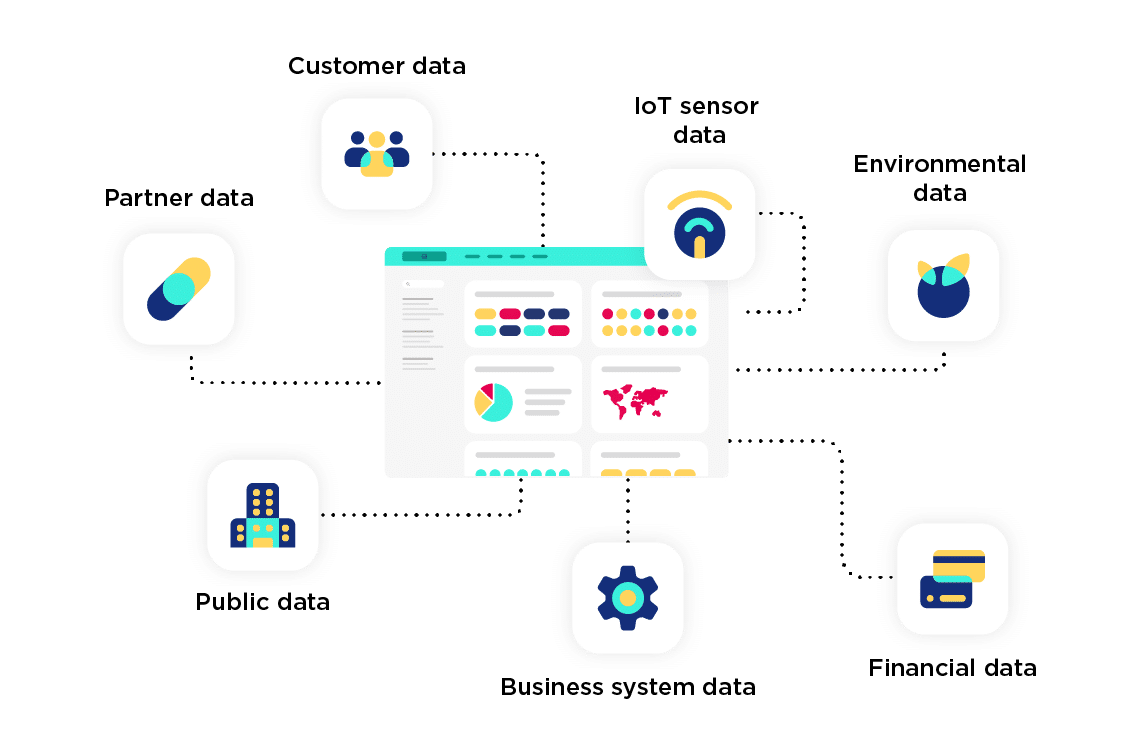

In an increasingly digital world, data now comes from an ever-growing number and variety of sources both within and outside the organization. More data is being produced, more quickly, from more sources, than ever before.

This data can be:

- From internal business systems such as finance, sales and CRM

- Unstructured, such as videos, audio files or word processing documents

- From the systems of partners within your ecosystem

- From new external sources, such as social media and Internet of Things (IoT) sensors, smart devices or video cameras

- From official sources, such as government, demographic or geographic information

As well as the range of sources, the volume of data is growing rapidly, requiring a data management architecture that can scale to handle very large volumes, potentially terabytes of data or more (often referred to as big data management).

Why do organizations have to manage data?

Today, data is critical to business success. To gain value from it, organizations must manage it successfully and efficiently, using strong data management principles to protect, prepare and share it. Failing to overcome data management challenges means that the potential value in the data is lost, hurting competitiveness and hampering success.

Failure to successfully manage data leads to:

- Little knowledge of what data an organization has, where it is stored and how it can potentially be used

- Poor technical performance across the data stack, including slow data processing

- Challenges to meeting compliance regulations

- Reputational damage and cybersecurity risk due to data leaks/poor security

- Data silos, where data from different systems is not integrated or made available to all

- Duplication of data and increased storage/processing costs

- An inability to share reliable data across the business, particularly with business teams without data skills

- Issues with AI projects caused by incomplete, inaccurate or poorly categorized data

2. How CDOs use data management to drive business value

In a data-driven world, harnessing data is essential to competitiveness. By following data management best practices, organizations benefit from:

Better decision-making

Through access to more complete information, managers can make better, more informed decisions based on high-quality management data. With a 360-degree view of business performance, organizations can anticipate potential issues and risks and take preventative action. Access to large volumes of high-quality, complete data from a full range of sources is central to taking advantage of machine learning (ML) and artificial intelligence (AI) algorithms, which automate and scale decision-making and enable organizations to predict future events and respond in real time. With the rise of generative and agentic AI, decision-making relies even more heavily on good quality, available and easily consumable data.

Greater efficiency

Through immediate access to up-to-date data, employees are able to work more productively and effectively. They do not have to waste time searching for data assets held by other departments or systems, or manually entering information from other sources. This means they can focus on using data to do their jobs, helping overall competitiveness and improving the employee experience as time-consuming manual processes are eliminated. Insights from data can also be used to optimize performance across the business and to automate processes through AI, further increasing efficiency gains.

Reduce costs

Volumes of data are increasing rapidly. All of this information needs to be stored, processed and protected, adding significantly to IT overheads and costs. An effective data management strategy monitors and eliminates duplicate data across the organization, breaking down data silos between departments. This reduces issues caused by data duplication and means the organization needs less data processing and storage capacity. Centralizing external data can also reduce costs by removing duplicate purchases by multiple departments. Having a single data management strategy can also reduce administration time and free up staff for other roles.

Greater innovation

Data is the fuel for increased collaboration between teams, departments and partners. Bringing together data from different areas and systems (such as sales, HR and customer service) powers the creation of new, innovative uses for data, driving the creation of new products and services, new ways of working and better service to customers and citizens. It breaks down barriers between departments and enables knowledge to be shared more widely across the organization, as well as with partners within the wider ecosystem, citizens (in the case of the public sector) and other stakeholders.

Enable compliance

Data, particularly personally identifiable information (PII), is rightly subject to stringent regulations, such as GDPR and CCPA. Organizations must therefore implement data security management to keep information safe and secure, with clear processes and audit trails that show who has access to which datasets and for what purposes. This requires an effective data management strategy that maps all data, ensures it is protected, and ensures it is only used for specified purposes. This enables regulatory compliance and protects brand reputation by demonstrating a strong commitment to safeguarding customer and employee data.

Improved customer experience and greater transparency

Understanding and meeting customer needs is crucial to business competitiveness. Improving the customer experience is a continuous process that relies on access to holistic data from across the customer journey. Having a complete picture of customer data through data management enables companies to monitor and improve the experience they offer, and to personalize how they meet the needs of specific customers and groups. As AI legislation is rolled out, regulators are also demanding greater transparency about which data is used to power AI models and agents, to guard against inaccurate or biased results.

Data is also essential to demonstrating transparency, whether around corporate activities (such as for ESG/CSR reporting) or within the public sector. Customers and citizens want to be provided with a complete picture of how the organizations they interact with are delivering on their objectives and meeting their needs – requiring strong management of data across the business.

3. Data governance vs data management

What is data governance?

Data governance is a key component of an effective data management strategy. It covers the policies and procedures around how you identify, organize, handle, manage, and use the data collected in your organization across its entire lifecycle.

The Data Governance Institute defines data governance as “A system of decision rights and accountabilities for information-related processes, executed according to agreed-upon models which describe who can take what actions with what information, and when, under what circumstances, using what methods.”

How is data governance different from data management? Essentially, governance sets the strategic principles and frameworks used to manage data, while data management solutions carry out the tactical work of managing that data.

The benefits of data governance

Data governance delivers both proactive and reactive benefits:

Proactive benefits

It creates new value from data by encouraging data sharing through breaking down silos between departments and providing staff with greater access to data. It also encourages the creation of a data culture by building a shared understanding and vocabulary around data.

Reactive benefits

Data governance is essential to ensuring that all data is accurate, secure, well-protected, compliant with regulations, and accessed and used correctly. This controls how data is used and reduces data-related risks, especially in AI projects.

Structuring a successful data governance program

Many data governance programs fail to deliver results, due to a perceived lack of business value and internal resistance from departments who see it as interference in their activities. This means that companies fail to gain the full benefits of their data management strategy.

What structure and competencies do you need for effective data governance?

Overcoming these challenges requires organizations to adopt the right structure for their program and to gain internal buy-in from both management and other teams. Putting the right structure in place ensures that data governance programs are linked to business objectives and have the broadest possible involvement from data owners from within the company.

Data governance teams must:

- Be headed by a senior leader, such as the Chief Data Officer (CDO) or equivalent. They must be directly involved in the program and have overall responsibility for its success. They must champion the project internally.

- Involve other senior management to set strategy and monitor performance through a Data Council. It is important that senior management are seen as highly visible supporters of the project.

- Be managed day-to-day by a dedicated Data Governance Officer with a combination of technical, business and collaboration skills

- Involve data owners and practitioners from across the organization, responsible for ongoing activities and the setting and enforcement of rules

What framework should you adopt for data governance?

The details of data governance frameworks will differ between organizations, depending on their specific maturity, needs and industry. However, they should all contain certain key elements to ensure data is effectively managed from end-to-end across your organization:

- Clear aims, objectives, and responsibilities, focused on the business value governance provides

- Detailed policies covering data discovery, availability, integrity, quality, security, cataloging, usability, and access/sharing

- A common vocabulary for describing data across the organization, for example to ensure that terms are used consistently between departments

- A set of rules covering how data is handled, standards for quality, metadata definitions, access rights and usage

- The right technology to drive these rules and processes, such as granular access management and metadata management

- An organizational model/structure and team to manage data and enforce governance

- A full communication and training program for all users on what data governance means and why the program is important

- Ongoing measurement of activities against business-focused KPIs

Read more about achieving data governance success in our blog.

4. The data management lifecycle – step by step

The data management process covers the entire lifecycle, from first creating or collecting raw data through to its usage, sharing and consumption across the organization and beyond. Organizations use a range of data management tools for this data lifecycle management.

Data collection

Data is created or collected from individual sources. These could be business systems (such as CRM, HR or sales), production systems (machines in factories), IoT sensors (for example collecting traffic or environmental data), or third-party data (provided by partners through APIs or other means). At this stage, data should be checked to ensure it meets data quality standards and data governance policies.
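As a minimal sketch of what checking data at collection time can look like, the Python below applies a list of quality rules to each incoming record and separates accepted records from rejected ones, keeping the reasons for each rejection. The rule names (`has_id`, `amount_is_number`, `amount_non_negative`) and the record fields are hypothetical examples, not part of any particular product; in practice the rules would be derived from your own data quality standards and governance policies.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Rule:
    """A named quality rule: `check` returns True when a record passes."""
    name: str
    check: Callable[[dict], bool]


def _is_number(value: Any) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly
    return isinstance(value, (int, float)) and not isinstance(value, bool)


# Hypothetical rules for illustration; replace with your own standards.
RULES = [
    Rule("has_id", lambda r: bool(r.get("id"))),
    Rule("amount_is_number", lambda r: _is_number(r.get("amount"))),
    Rule("amount_non_negative", lambda r: _is_number(r.get("amount")) and r["amount"] >= 0),
]


def validate(record: dict, rules: list = RULES) -> list:
    """Return the names of the rules the record fails (empty list means it passes)."""
    return [rule.name for rule in rules if not rule.check(record)]


def ingest(records):
    """Split incoming records into accepted ones and (record, failures) pairs."""
    accepted, rejected = [], []
    for record in records:
        failures = validate(record)
        if failures:
            rejected.append((record, failures))
        else:
            accepted.append(record)
    return accepted, rejected


accepted, rejected = ingest([
    {"id": "c1", "amount": 120.0},
    {"id": "", "amount": 50},
    {"id": "c3", "amount": -5},
])
print(len(accepted), [failures for _, failures in rejected])
# prints: 1 [['has_id'], ['amount_non_negative']]
```

Keeping rejected records together with the names of the rules they broke, rather than silently dropping them, gives data owners something concrete to fix at the source.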

Data integration

Data from multiple sources and different systems is combined into a single repository, such as a data warehouse or a data lake, breaking down data silos. This enables easier cross-referencing and the elimination of duplicate data. As it enables better reporting and predictive analysis, data integration simplifies the decision-making chain for organizations.
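To illustrate how integration removes duplicates, here is a minimal sketch that merges records from several systems into one repository keyed by a shared identifier. The source names (`crm`, `billing`), the `customer_id` key and the "first non-null value wins" merge policy are assumptions made for the example; a real data warehouse or lake would apply its own matching and survivorship rules.

```python
def integrate(*sources, key="customer_id"):
    """Combine (source_name, records) pairs into one repository keyed by `key`.

    Records sharing a key collapse into a single entry; for each field the first
    non-null value seen wins, and the entry remembers which sources contributed.
    """
    repository = {}
    for source_name, records in sources:
        for record in records:
            entry = repository.setdefault(record[key], {key: record[key], "sources": []})
            for field, value in record.items():
                if field != key and entry.get(field) is None:
                    entry[field] = value
            if source_name not in entry["sources"]:
                entry["sources"].append(source_name)
    return list(repository.values())


crm = ("crm", [{"customer_id": "42", "name": "Ada", "email": None}])
billing = ("billing", [
    {"customer_id": "42", "email": "ada@example.com"},
    {"customer_id": "7", "name": "Lin"},
])
customers = integrate(crm, billing)
for customer in customers:
    print(customer)
# Customer 42 now combines the CRM name with the billing email in one record.
```

Recording the contributing `sources` on each merged entry is a small step toward the traceability discussed under data lineage.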

Data preparation

Data is prepared for its use. It is first cleaned (data cleansing) which involves identifying and fixing incorrect, incomplete, duplicate, unneeded, or otherwise erroneous data in a data set. Processors are then used to standardize formatting (for example ensuring all dates are in the same format) and to anonymize any personally identifiable information.
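The three preparation steps (cleansing, standardizing formats and protecting PII) can be sketched in a few lines of standard-library Python. This is an illustration under stated assumptions: the accepted date formats are hypothetical (in particular, `05/03/2024` is read as day-first), and the salted SHA-256 hash is pseudonymization, a weaker technique than true anonymization, shown here only because it is simple and deterministic.

```python
import hashlib
from datetime import datetime
from typing import Optional

# Assumed source date formats; extend this tuple for your own systems.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y")


def standardize_date(value: str) -> Optional[str]:
    """Return the date as ISO 8601 (YYYY-MM-DD), or None if no format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def pseudonymize(value: str, salt: str) -> str:
    """Salted SHA-256 digest. This is pseudonymization, not full anonymization."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:16]


def prepare(rows, salt="replace-with-a-secret"):
    seen = set()
    cleaned = []
    for row in rows:
        email = (row.get("email") or "").strip().lower()
        date = standardize_date(row.get("date") or "")
        if not email or date is None:
            continue  # cleansing: drop incomplete or unparseable rows
        if (email, date) in seen:
            continue  # cleansing: drop duplicates once formats are standardized
        seen.add((email, date))
        cleaned.append({"user": pseudonymize(email, salt), "date": date, "amount": row.get("amount")})
    return cleaned


rows = [
    {"email": "Ada@Example.com ", "date": "05/03/2024", "amount": 10},
    {"email": "ada@example.com", "date": "2024-03-05", "amount": 10},  # same event, other format
    {"email": "", "date": "2024-03-06", "amount": 5},                  # incomplete
    {"email": "lin@example.com", "date": "not a date", "amount": 7},  # unparseable date
]
print(prepare(rows))  # one row remains, dated 2024-03-05, with no email in clear text
```

Note that standardization happens before deduplication: the first two rows only become recognizable as duplicates once the email is normalized and both dates are in the same format.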

Data enrichment

In the data enrichment (or data transformation) step, additional information is added to existing datasets to make them more usable and valuable. For example, organizations can add geographic, weather or other reference data to provide context. The Huwise Data Hub contains over 30,000 datasets that can be used to enrich your own datasets.
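At its simplest, enrichment is a lookup join between your records and a reference dataset. The sketch below performs a left join in plain Python: every original record is kept, and the requested reference fields are added, or set to `None` when there is no match. The monitoring-site codes, `region` and `altitude_m` fields are invented for illustration and do not come from any real reference dataset.

```python
def enrich(records, reference, key, fields):
    """Left join: keep every record and add `fields` from the matching reference entry.

    Unmatched records get None for each field, so all output rows share one schema;
    values already present in a record are never overwritten.
    """
    enriched = []
    for record in records:
        match = reference.get(record.get(key), {})
        row = dict(record)
        for field in fields:
            row.setdefault(field, match.get(field))
        enriched.append(row)
    return enriched


# Hypothetical reference data keyed by monitoring-site code.
sites = {"S01": {"region": "North", "altitude_m": 120}}
readings = [{"site": "S01", "pm10": 21}, {"site": "S09", "pm10": 40}]
enriched_readings = enrich(readings, sites, key="site", fields=("region", "altitude_m"))
print(enriched_readings)
```

A left join is used so that enrichment never silently loses records; unmatched rows stay visible with empty context fields, which also flags gaps in the reference data.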

Data sharing and exploration

If data remains in the hands of data analysts it does not unlock its full value. It has to be shared more widely amongst non-experts, whether business decision-makers, citizens or employees who require it for their day-to-day jobs. Raw data is only usable and consumable by data experts. In this form it cannot be easily understood by business teams without technical skills.

Data must therefore be transformed into compelling data visualizations and data products. Data products are high-value, ready to consume data assets, designed to meet exacting standards around their presentation, readability, quality, and reliability. They provide essential information in a ready to use format to a broad business audience.

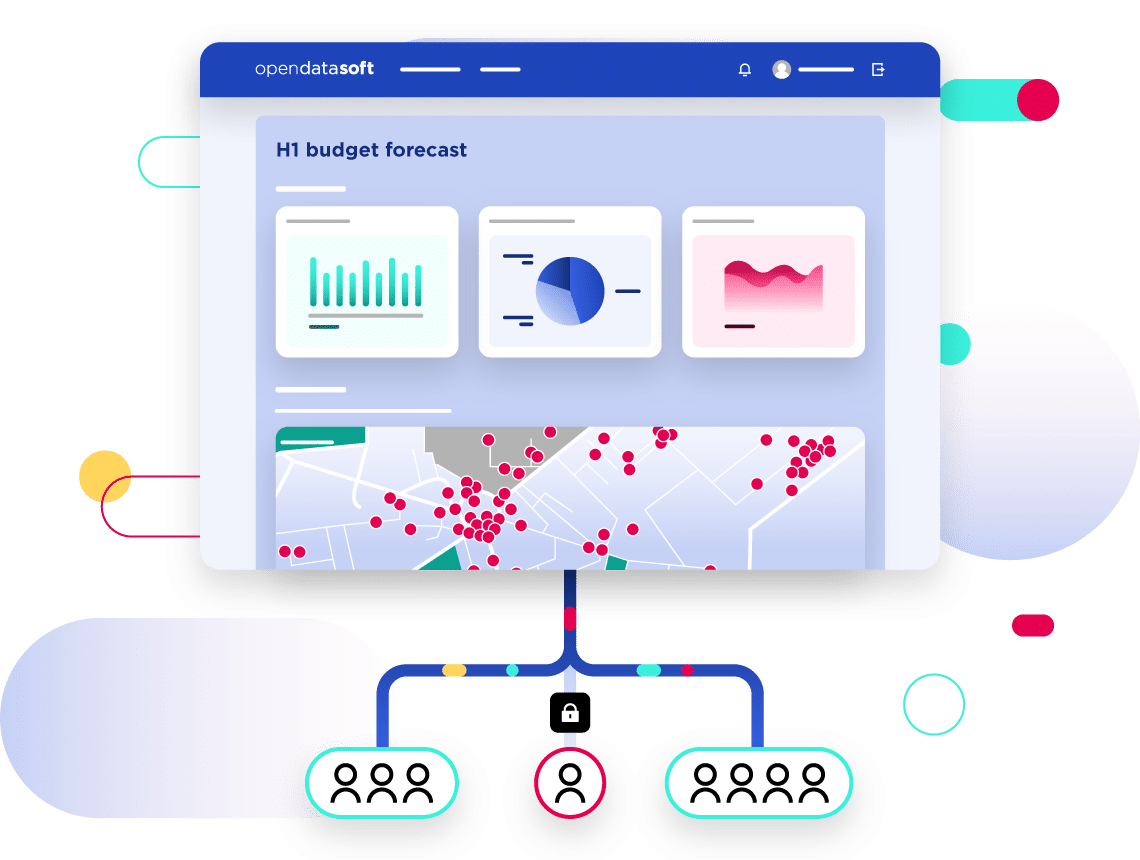

Sharing needs to be underpinned and enabled by intuitive, self-service data product marketplaces that provide a centralized, accessible source of data that combines ease of use with robust security and access controls. This enables seamless data exploration by all.

Sharing and data consumption are often neglected in data management strategies, reducing ROI from data. What is needed is to democratize data so that it can be shared and consumed by everyone.

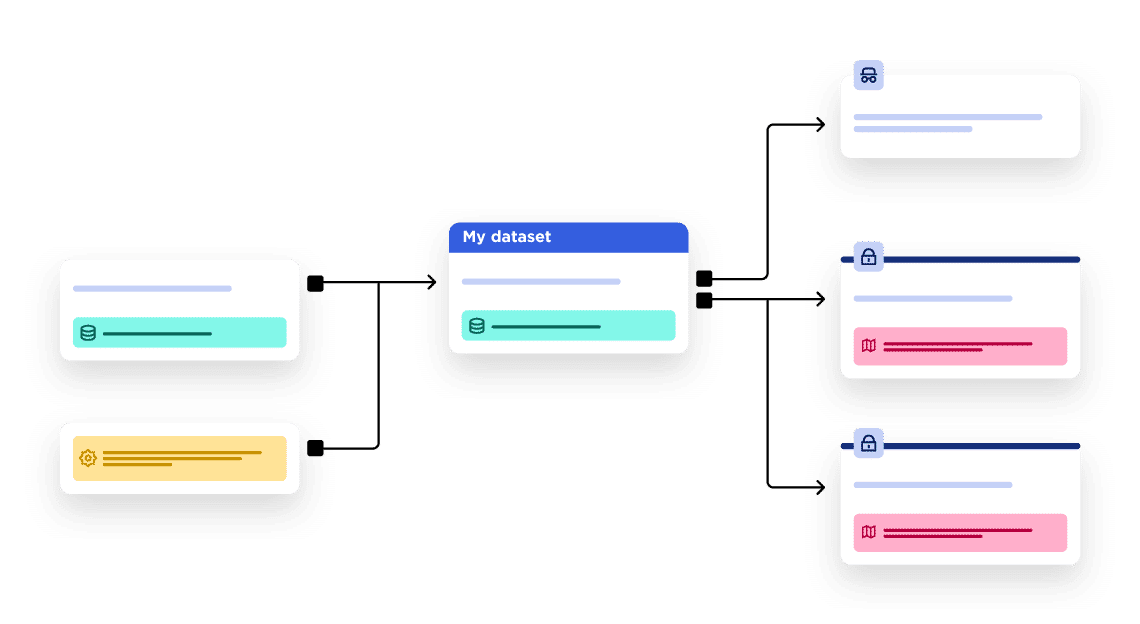

Data lineage

To improve data management, it is vital to understand how data flows across your organization, and how and where it is used. Data lineage tools and dashboards provide this insight. They deliver full traceability over data, help teams better understand the needs of users, and demonstrate impact and performance against KPIs, enabling data management strategies to be continually optimized and improved. Robust lineage is particularly vital for AI data use, providing an audit trail of what data has been consumed to power models and agents.
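Underneath most lineage tools is a directed graph linking each asset to the assets it was derived from. The minimal sketch below records those links and walks them to answer the audit question "what data fed this model?". The asset names are hypothetical, and a production lineage system would also capture timestamps, jobs and column-level detail.

```python
from collections import defaultdict


class LineageGraph:
    """Records which assets each asset was derived from."""

    def __init__(self):
        self._inputs = defaultdict(set)  # asset -> direct upstream assets

    def record(self, output, *inputs):
        """Log that `output` was produced from `inputs`."""
        self._inputs[output].update(inputs)

    def upstream(self, asset):
        """Return every asset `asset` depends on, directly or transitively."""
        found, stack = set(), [asset]
        while stack:
            for parent in self._inputs.get(stack.pop(), ()):
                if parent not in found:
                    found.add(parent)
                    stack.append(parent)
        return found


lineage = LineageGraph()
lineage.record("crm_clean", "crm_raw")
lineage.record("customer_360", "crm_clean", "billing_raw")
lineage.record("churn_model", "customer_360")
print(sorted(lineage.upstream("churn_model")))
# prints: ['billing_raw', 'crm_clean', 'crm_raw', 'customer_360']
```

The `found` set prevents revisiting assets, so the traversal terminates even if the recorded graph accidentally contains a cycle.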

5. Increased data consumption: the essential step to data democratization

We live in a data-driven world, and access to usable information in understandable formats is vital for businesses, employees, citizens and partners. Data cannot be left solely in the hands of experts, such as business intelligence analysts, or require specific skills to access it and make sense of it. That is driving the importance of data democratization, the process of seamlessly sharing data with all in easily consumable ways that they can understand and make use of. There is more on data democratization and its importance in this blog.

The challenges to increasing data consumption

Unfortunately, too many organizations currently lack the data management capabilities to truly democratize their data by driving its consumption at scale.

Firstly, data is simply not easily available in formats and locations that people can immediately access and use. Employees, citizens and partners struggle to find the data they require, as it is scattered across the organization in multiple silos and systems and is not easy to understand or work with, or is solely listed in static data catalogs. It is not in formats such as data products or available from intuitive data product marketplaces.

Secondly, they have not yet created a data-centric culture within the organization where data is seen as a crucial resource for all, and where staff are confident in accessing, understanding and reusing data in their daily working lives. Overcoming this obstacle requires a focus on training and culture to build confidence and ensure your people are data-driven.

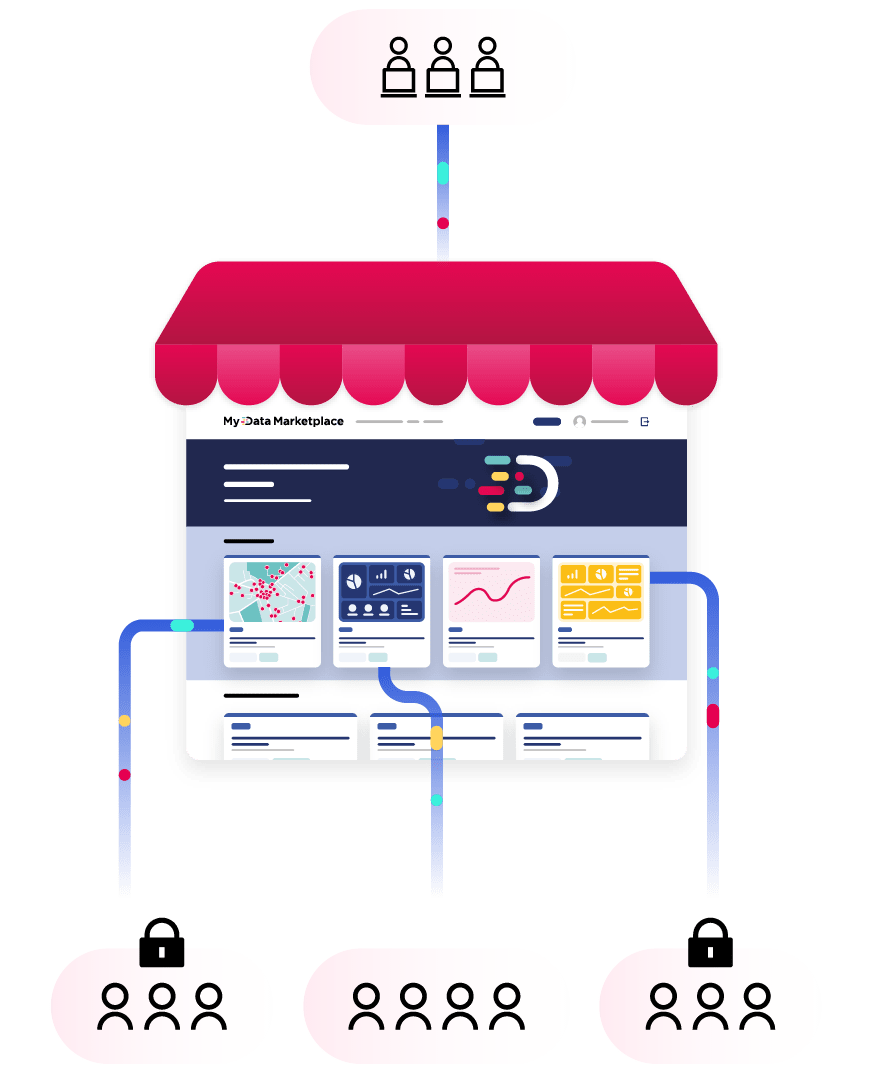

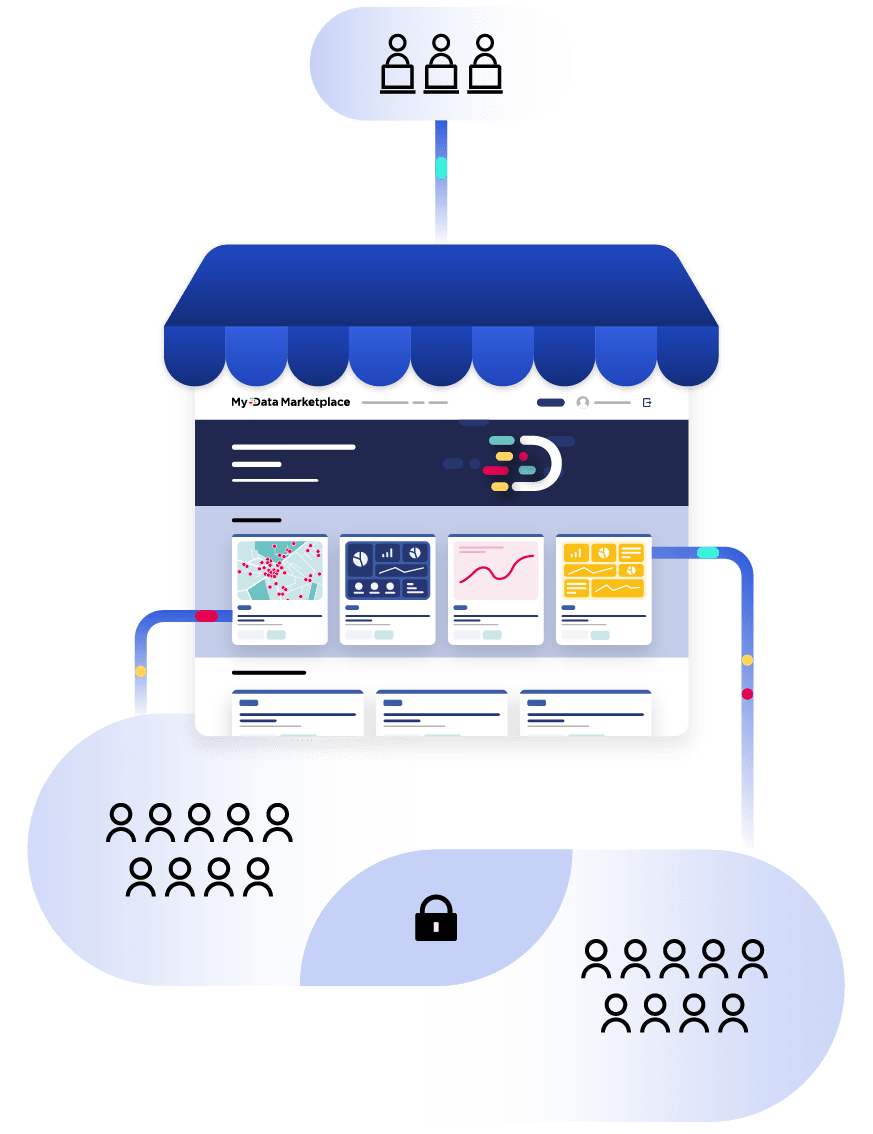

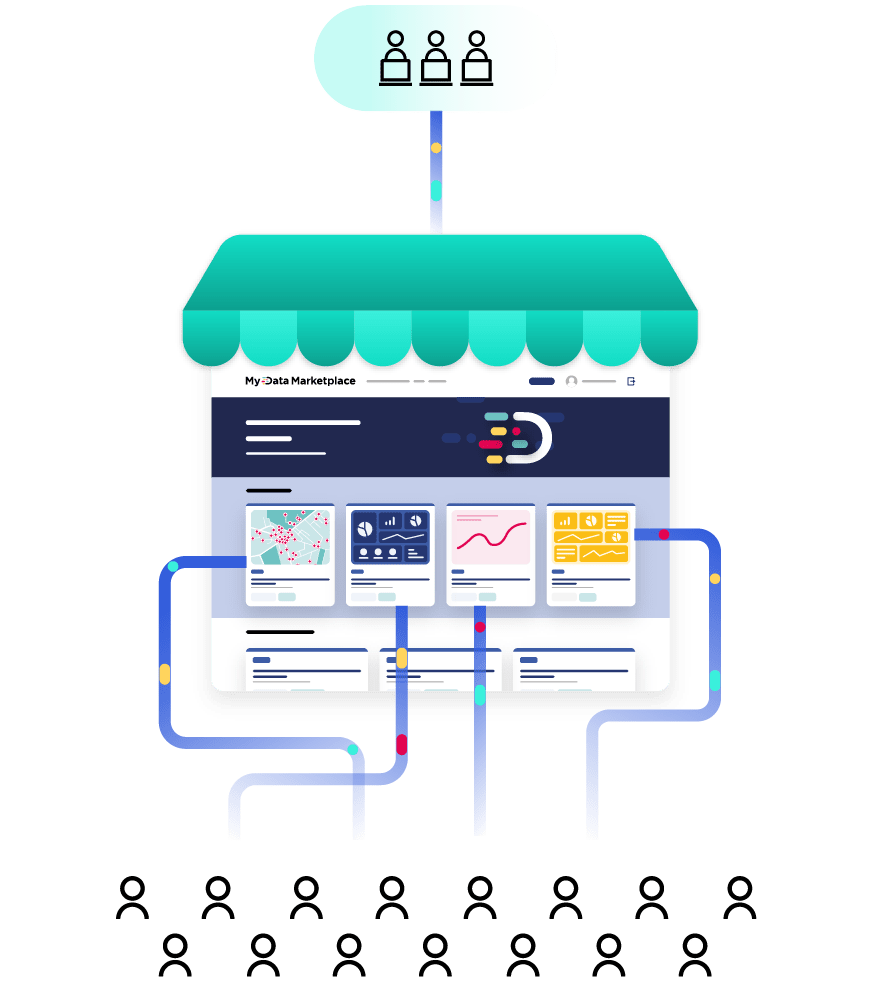

What is a data product marketplace?

Solving this challenge requires organizations to centralize access to their data, creating an intuitive, self-service data product marketplace: a one-stop shop that contains all available data assets, along with the tools to reuse this information. Based on the same user experience principles as an e-commerce marketplace, data product marketplaces make finding and consuming relevant data as straightforward as buying a product online.

Gartner has identified data marketplaces as an emerging category in its latest Hype Cycle for Data Management, with their adoption being driven by the rise of AI and data products. In 2025 Huwise was included as a sample vendor in this category, under its previous name of Opendatasoft. Read more about the Hype Cycle here.

Depending on the audience for the data, there are three main types of data product marketplace used to centralize and share data:

Internal data product marketplace

Creating an internal data marketplace is a powerful way of bringing together all data assets, including data products, from across the organization and making them available to employees. It breaks down internal data silos, simplifies information sharing and powers AI models and agents. An internal data marketplace has to have an engaging, self-service user interface, make it simple to discover data and provide all employees with confidence that data is accurate, high quality and meets their needs, without requiring training or support. To protect confidentiality and prevent misuse, internal data marketplaces should have built-in access controls, ensuring that employees cannot view personal or other data that is not relevant to their role. Data must be machine readable so that it can be easily consumed by AI agents and models.
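Built-in access controls often work at column level, so the same dataset can be shared with several roles while each sees only the fields relevant to it. The sketch below shows that idea with a hypothetical role-to-columns policy and a deny-by-default rule for unknown roles; the role and column names are assumptions for illustration, not a description of any specific marketplace's permission model.

```python
# Hypothetical policy: the columns each role is allowed to see.
COLUMN_POLICY = {
    "sales_analyst": {"order_id", "region", "amount"},
    "hr_manager": {"employee_id", "department", "salary"},
}


def visible_rows(rows, role, policy=COLUMN_POLICY):
    """Return only the columns `role` may see; unknown roles get nothing (deny by default)."""
    allowed = policy.get(role)
    if not allowed:
        return []
    return [{col: val for col, val in row.items() if col in allowed} for row in rows]


orders = [{"order_id": 1, "region": "North", "amount": 9.5, "customer_email": "a@example.com"}]
print(visible_rows(orders, "sales_analyst"))  # customer_email is filtered out
print(visible_rows(orders, "contractor"))     # unknown role: []
```

Denying by default means a misconfigured or missing role exposes nothing, which is the safer failure mode for personal data.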

B2B data product marketplace

Solving business and societal challenges, such as around decarbonization and effective supply chains, requires an ecosystem approach. Organizations need to work with their suppliers, partners and other stakeholders, collaborating through data. Creating a B2B data product marketplace enables this collaboration, providing datasets that can be enriched or used by partners, deepening relationships and driving innovation. B2B data marketplaces also enable organizations to monetize their data, creating new services that they provide to partners and customers, either as datasets or through dashboards. These add value to their offerings and create new revenue streams.

Public data product marketplace

Available to everyone over the internet, a public data product marketplace shares all of an organization’s relevant data assets with the world, strengthening relationships with key stakeholders and building trust. Originally created by public sector bodies to increase transparency by sharing information on their activities with citizens, and to meet compliance requirements, public data marketplaces are increasingly being rolled out by businesses. They recognize the benefits of transparency in demonstrating how they are performing against key metrics, such as their Environmental, Social, and Governance (ESG) and Corporate Social Responsibility (CSR) goals. A public data product marketplace should be comprehensive and easy to use, with a strong search function. Examples of public data marketplaces include open government, smart city, CSR/ESG portals and transparency hubs.

Making it easy to consume data

Simply making data available through a marketplace is not enough to deliver data democratization or increased consumption by humans and AI. It must be easy to:

- Discover the data, through AI-powered search that understands the meaning of user queries, even when they are imprecise, incomplete or in a different language, replicating the product search experience of an e-commerce site to provide relevant, targeted results. These should contain full details of what the data covers, its source and owner, presented in an easily understandable format. It should also recommend further data assets to encourage greater consumption. This increases confidence and trust, especially from non-experts.

- Visualize the data, through a full suite of data visualization tools that can be used to create interactive maps, informative dashboards, compelling graphics and understandable, relatable data stories. These have to be easy to use by non-specialists, and include drag and drop/no code options that accelerate the creation of visualizations within the organization. Tools should provide real-time previews to demonstrate the impact of changes and be able to create 100% responsive visualizations that fit all screen sizes.

- Consume and download the data in a full range of formats, such as data products, text files or CSV spreadsheets, as well as commonly used formats such as .doc and .xls. For more advanced users looking to automate access to data, APIs should be provided for each dataset. These allow users to link data to their own systems and make it easy to download bulk data for detailed analysis. APIs also enable AI tools to access and download data automatically, without requiring human intervention.
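Whatever the delivery channel, file download or API, the same records end up serialized into standard formats. As a minimal sketch using only Python's standard library (and not any specific marketplace API), the code below renders one dataset as CSV for spreadsheet users and as JSON with a small metadata envelope for programmatic and AI consumers. The `title` and `source` metadata fields are illustrative assumptions.

```python
import csv
import io
import json


def to_csv(rows):
    """Serialize a list of dicts to CSV text, using the first row's keys as the header."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows, metadata):
    """Serialize records plus descriptive metadata, for programmatic or AI consumers."""
    return json.dumps({"metadata": metadata, "records": rows}, ensure_ascii=False)


dataset = [{"region": "North", "sales": 120}, {"region": "South", "sales": 95}]
print(to_csv(dataset))
print(to_json(dataset, {"title": "Regional sales", "source": "finance"}))
```

Shipping metadata alongside the records in the JSON form matters more for machines than for people: an AI agent has no other way to learn what the columns mean or where the data came from.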

6. The data platform foundations: mesh, virtualization, and warehouses

Successful data management requires a combination of strategy, process and powerful data management technologies.

It is essential to create a flexible, scalable and secure data stack that covers the end-to-end data management process across the organization. It must stretch from collection to consumption. This requires an overall data architecture and individual tools for data management within the stack.

Data architecture

How you structure your data architecture has a major impact on your data management stack. The data architecture sets out the infrastructure behind your data management program and is crucial to its success.

An organization’s IT architecture must allow it to share data and make it accessible to everyone. Data must be easily accessible, and not stuck in silos. However, if architectures are too centralized and concentrate activities within a central team, there is a risk that departments will not actively participate in data sharing, damaging your chances of success.

The data mesh model

Increasingly, organizations are looking to adopt data mesh architectures. These are designed to underpin data democratization by decentralizing and federating responsibility for data to the teams closest to it, backed by agreed, company-wide governance and metadata standards that ensure interoperability, and enabled by a shared self-service data infrastructure.

Rather than being a technology or a set of tools, data mesh provides a framework and guidelines to help organizations work with data in the most effective way, built around three building blocks:

- Distributed data products owned by independent cross-functional teams which can contain both embedded data engineers and data product owners

- Centralized governance to ensure interoperability, consistency and security

- A common data infrastructure to host, enhance and share data
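The interplay between the first two building blocks (domain-owned data products and centralized governance) can be sketched as follows: each domain team describes its own products, while a single shared check enforces the company-wide metadata standard before anything is published. The required metadata keys and product names are hypothetical; data mesh itself does not prescribe a particular metadata schema.

```python
from dataclasses import dataclass, field

# Hypothetical company-wide metadata standard, defined once and applied to every domain.
REQUIRED_METADATA = {"title", "description", "owner_domain", "update_frequency", "license"}


@dataclass
class DataProduct:
    name: str
    domain: str  # the cross-functional team that owns and maintains the product
    metadata: dict = field(default_factory=dict)


def governance_issues(product):
    """Check a domain's data product against the central standard."""
    missing = sorted(REQUIRED_METADATA - set(product.metadata))
    issues = [f"missing metadata: {key}" for key in missing]
    owner = product.metadata.get("owner_domain")
    if owner is not None and owner != product.domain:
        issues.append("owner_domain does not match owning domain")
    return issues


def publishable(products):
    """Names of the products that meet the shared standard and can be shared."""
    return [p.name for p in products if not governance_issues(p)]


sales = DataProduct("daily_sales", "sales", {
    "title": "Daily sales", "description": "Sales per store per day",
    "owner_domain": "sales", "update_frequency": "daily", "license": "internal",
})
headcount = DataProduct("headcount", "hr", {"title": "Headcount", "owner_domain": "finance"})
print(publishable([sales, headcount]))  # ['daily_sales']
print(governance_issues(headcount))
```

Ownership stays decentralized (each team fills in its own metadata), while interoperability comes from every product passing the same central check.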

Data mesh makes it easier to find and share high-quality data and turn it into data products for internal or external use. To learn more about the architecture read our blog “What is data mesh and why is it vital to data democratization?”.

Security

Clearly data needs to be protected at all times, both from external and internal threats. Your data management policy and architecture must have security at its core. Effective data security management is therefore vital. Invest in data privacy management tools to keep information anonymous, and audit security at all stages of the data management process, from collection and storage to preparation and sharing. As part of security compliance, manage who has access to which datasets, providing a full audit trail and preventing unauthorized usage.
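The last point, managing who can access which datasets while keeping a full audit trail, can be sketched as a small access manager that logs every request, including denied ones. The class, its method names and the log fields are hypothetical; real deployments would persist the log in tamper-resistant storage rather than an in-memory list.

```python
from datetime import datetime, timezone


class AccessManager:
    """Per-dataset access grants with an append-only audit trail of every request."""

    def __init__(self):
        self._grants = {}    # user -> set of dataset ids the user may read
        self.audit_log = []  # one entry per request, whether allowed or denied

    def grant(self, user, dataset):
        self._grants.setdefault(user, set()).add(dataset)

    def revoke(self, user, dataset):
        self._grants.get(user, set()).discard(dataset)

    def request(self, user, dataset, purpose):
        allowed = dataset in self._grants.get(user, set())
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "dataset": dataset,
            "purpose": purpose,
            "allowed": allowed,
        })
        return allowed


access = AccessManager()
access.grant("ana", "sales_2024")
print(access.request("ana", "sales_2024", "quarterly report"))  # True
print(access.request("bob", "sales_2024", "ad hoc analysis"))   # False, but still logged
print(len(access.audit_log))                                    # 2
```

Logging the stated purpose alongside each request supports the compliance requirement described earlier: showing not only who accessed a dataset, but why.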

Data solutions

The data stack will contain a variety of data management products. It is essential that these all work together seamlessly to ensure interoperability, efficient processing and protection for your data. The data stack normally includes these data management solutions:

- Data creation: Systems creating data, such as CRM solutions for customer data management

- Data collection: Solutions that bring data together to make it easier to analyze. Data can be collected and stored in the same place, or remain in its original location through data virtualization approaches. Data collection solutions include data warehouses, data lakes and data lakehouses, as detailed below.

- Data warehouse: A storage space solely for structured data. This makes it easier to manage, but makes exploiting data less flexible.

- Data lake: A storage space that contains all of an organization’s data in its raw form. This gives flexibility in analysis but can be difficult to govern.

- Data lakehouse: A hybrid model between a data lake and a data warehouse. This adds some structure to unstructured data to make it easier to find and use.

- Both data warehouses and data lakes require specialist skills and a high level of resource to implement and maintain, as our blog comparing the two technologies explains.

- Data management platforms (DMPs): These are software platforms that combine multiple technical tools in a single solution, with the aim of increasing efficiency and simplicity. They deliver a range of data management services through the same data management platform. Gartner highlights their increasing uptake as a new trend, but warns that they need to be combined with best-of-breed technologies such as data product marketplaces to guarantee success. There is more in this blog.

- Data catalogs: Traditional data catalogs provide an inventory of an organization’s data. However, they are technical tools aimed at data and IT experts, and merely offer an index of data: the data itself cannot be directly accessed through the catalog.

- Business intelligence (BI) tools: Once data has been prepared, data analysts use business intelligence tools to run reports and queries on it. BI tools are designed to be used by experts, meaning that the vast majority of people do not have the skills or training to operate them. This limits data democratization.

- Data visualization tools: Similar to BI tools, data visualization software allows users to turn tabular data into maps, dashboards and other graphical forms. These make it more compelling and understandable to users. However, as with BI tools, many data visualization platforms require expertise and training to be used correctly.

- Data product marketplaces: While organizations have invested heavily in their data stack in terms of both technology licenses and skilled experts to run their data management systems, they still struggle to get full value from their data. This is because their stack lacks a way of easily sharing data at scale with the wider business. Data product marketplaces overcome this consumption challenge.

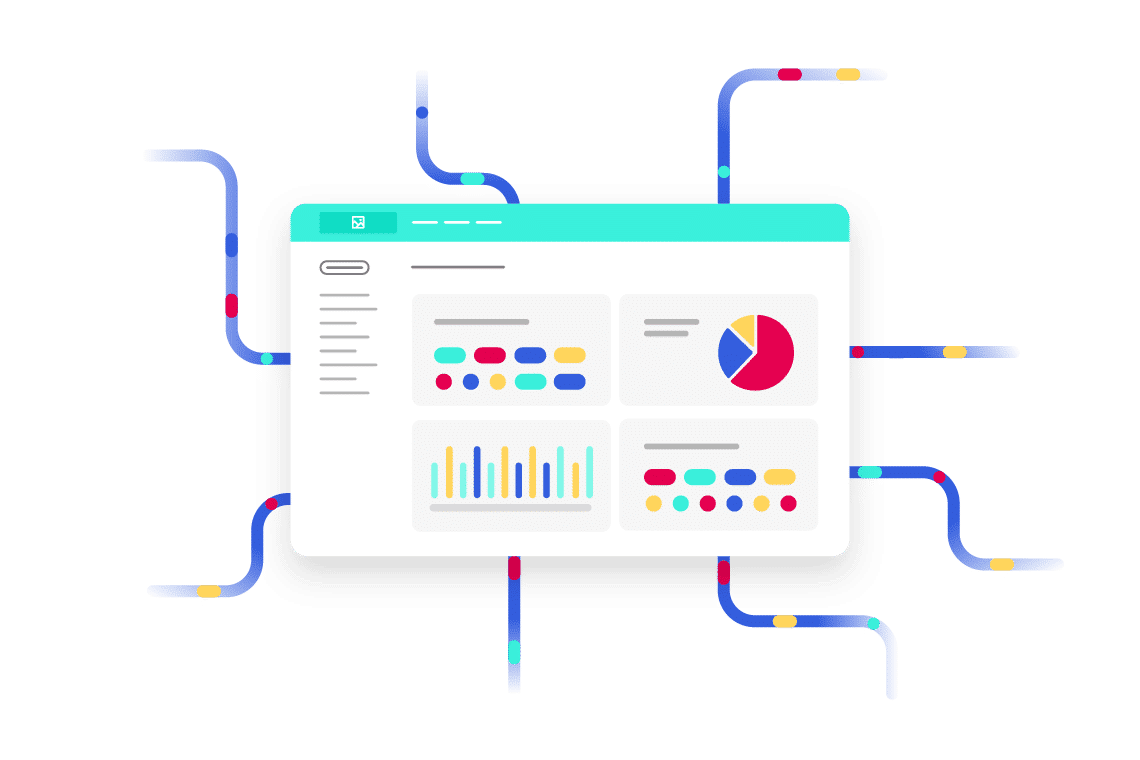

A data product marketplace sits within your data management software stack and includes key features that allow you to:

- Connect to all data sources to bring data together, wherever it has been created or stored

- Enhance data to improve quality by applying processors to standardize and enrich data with external datasets

- Publish data through powerful features that safeguard quality and make management and administration simple and straightforward. The solution should automate key tasks, such as the creation and sharing of metadata to increase efficiency

- Enable the discovery and consumption of data through an intuitive, e-commerce style, self-service experience, building trust and driving greater usage by business teams

- Centralize, distribute, and share data products with built-in data contracts, making them easily visible, discoverable and accessible at scale

- Visualize data through easy-to-use no code tools that mean non-experts can quickly create compelling data visualizations

- Share data assets in ways that promote greater reuse by all – from APIs for expert users to download through standard file formats to shareable links, available on open data portals, internal data marketplaces and partner portals.

- Ensure reliable, high-quality machine readable data is available to AI models and agents, with standardized metadata to enable discovery and consumption

- Analyze how data is being used and shared through data lineage capabilities. This helps understand data flows within your organization and therefore improve data management.
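The data contracts mentioned among these features are, in essence, machine-checkable promises about a data product's shape and freshness. The sketch below assumes a deliberately simple contract (expected column types plus a maximum age in hours) and lists every violation in a delivery; the `DataContract` class and its fields are illustrative assumptions, not a standard contract format. Type checks here are strict, so an integer in a `float` column would be flagged.

```python
from dataclasses import dataclass


@dataclass
class DataContract:
    """Hypothetical contract terms: expected column types and maximum data age."""
    schema: dict          # column name -> expected Python type
    max_age_hours: float  # freshness guarantee offered to consumers


def contract_violations(contract, rows, age_hours):
    """List every way a data product delivery breaks its contract (empty = compliant)."""
    problems = []
    if age_hours > contract.max_age_hours:
        problems.append(f"stale: {age_hours}h old, contract allows {contract.max_age_hours}h")
    for i, row in enumerate(rows):
        missing = contract.schema.keys() - row.keys()
        if missing:
            problems.append(f"row {i}: missing {sorted(missing)}")
        for column, expected in contract.schema.items():
            if column in row and not isinstance(row[column], expected):
                problems.append(f"row {i}: {column} should be {expected.__name__}")
    return problems


contract = DataContract(schema={"region": str, "sales": float}, max_age_hours=24)
print(contract_violations(contract, [{"region": "North", "sales": 10.0}], age_hours=2))  # []
print(contract_violations(contract, [{"region": "North", "sales": "10"}, {"region": "South"}], age_hours=30))
```

Running such a check before publishing lets the marketplace block or flag a delivery that would break downstream consumers, human or AI, instead of letting them discover the problem later.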

By ensuring data consumption at scale across the organization, a data product marketplace maximizes ROI on data management software investments. It underpins greater efficiency, collaboration and innovation, powered by seamless access to relevant data for all. It supports data management best practices and the creation of a data culture across the organization.

7. From data catalog to data marketplace: moving beyond metadata

Organizations need to build a full picture of their entire data estate, so that they can ensure that it is well-managed, protected and compliant. Historically they have achieved this through data catalog solutions, technical tools that use metadata to classify all of an organization’s data assets, providing an index and inventory.

While these solutions ensure governance and compliance, and give data experts a comprehensive view of the data landscape, data catalogs are not business-focused. They are complex tools that can only be used by technical teams, without an intuitive interface to make them useful to the business. They act as a metadata catalog, rather than providing direct access to data assets. They do not enable data consumption by the wider business.

Due to these limitations, organizations are increasingly looking at data product marketplaces to scale data consumption. These provide an intuitive, e-commerce style experience to business users, enabling them to search for, discover and access relevant data that meets their needs. Containing all the technical features of a data catalog (or integrating with existing solutions), they connect human users and AI with data through an engaging, self-service interface. This turns data into business value for all.

8. Preparing your data for the AI and generative AI era

Accurate, reliable data is essential to successful AI programs. Issues such as poor-quality, unavailable or untrustworthy data were identified by 93% of executives interviewed by Wavestone as the biggest barrier to their AI success. Failure to correctly prepare data can lead to poor AI decision-making, potential bias, inaccurate results, security issues and compliance failures. AI data management has to be at the heart of CDO strategies.

Ensuring that data foundations are in place is therefore vital: seamless, rapid access to a wide variety and volume of high-quality, reliable, timely, trustworthy and comprehensive data is critical to AI success, both for training Large Language Models and for powering agentic AI.

The 6 foundations of an AI-ready data program

1 Create a clear data strategy

Audit your current data landscape, gaps and actions to be taken to optimize data for specific AI use cases. Ensure that data is quantified and meets standards around semantics and quality, and is AI-ready in terms of labelling, metadata, bias mitigation and lineage. Focus on the most pressing AI use cases and the data required to support them.
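As an illustration of what such an audit might check, the sketch below scores each dataset required by a use case against a few AI-readiness criteria: description, accountable owner, known lineage, labelling and missing-value rate. The `DatasetProfile` fields, the 5% null threshold and the dataset names are all hypothetical assumptions chosen for the example, not a standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DatasetProfile:
    """Hypothetical metadata record for one dataset in the data inventory."""
    name: str
    description: str
    owner: Optional[str]
    lineage_source: Optional[str]
    labelled: bool
    null_ratio: float  # share of missing cells, between 0.0 and 1.0


def readiness_gaps(ds: DatasetProfile, max_null_ratio: float = 0.05) -> list[str]:
    """List the AI-readiness criteria this dataset fails (assumed criteria)."""
    gaps = []
    if not ds.description.strip():
        gaps.append("missing description")
    if ds.owner is None:
        gaps.append("no accountable owner")
    if ds.lineage_source is None:
        gaps.append("unknown lineage")
    if not ds.labelled:
        gaps.append("not labelled for training")
    if ds.null_ratio > max_null_ratio:
        gaps.append(f"null ratio {ds.null_ratio:.0%} exceeds {max_null_ratio:.0%}")
    return gaps


def audit_use_case(required: list[str], inventory: dict[str, DatasetProfile]) -> dict[str, list[str]]:
    """Audit only the datasets a given AI use case depends on."""
    report = {}
    for name in required:
        if name not in inventory:
            report[name] = ["dataset not found in inventory"]
        else:
            report[name] = readiness_gaps(inventory[name])
    return report


inventory = {
    "churn": DatasetProfile("churn", "Monthly churn", "crm-team", "crm.accounts", True, 0.01),
    "tickets": DatasetProfile("tickets", "", None, None, False, 0.12),
}
print(audit_use_case(["churn", "tickets", "nps"], inventory))
```

Scoping the audit to one use case at a time mirrors the advice to focus on the most pressing AI use cases first.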

2 Build and deploy data products

Data products are the optimal means to share data at scale with AI and humans. Bring together and enrich multiple datasets and make them accessible through an intuitive interface as machine-readable data products. This delivers context, accessibility, trust, lineage, accountability and observability around AI.
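A simplified sketch of this step: two source datasets are joined and aggregated, then packaged with the context (description, owner, lineage, schema) that makes the result a machine-readable data product rather than a bare table. The dataset names, owner and JSON layout are hypothetical; real platforms define their own product formats.

```python
import json

# Hypothetical source datasets.
stores = [{"store_id": "S1", "city": "Lyon"}, {"store_id": "S2", "city": "Paris"}]
sales = [
    {"store_id": "S1", "revenue": 100.0},
    {"store_id": "S1", "revenue": 50.0},
    {"store_id": "S2", "revenue": 80.0},
]


def build_revenue_by_city(stores: list[dict], sales: list[dict]) -> dict:
    """Join sales to stores, aggregate by city and wrap the result with metadata."""
    city_of = {s["store_id"]: s["city"] for s in stores}
    totals: dict[str, float] = {}
    for row in sales:
        city = city_of.get(row["store_id"], "unknown")  # enrichment: add the city
        totals[city] = totals.get(city, 0.0) + row["revenue"]
    records = [{"city": c, "revenue": round(t, 2)} for c, t in sorted(totals.items())]
    return {
        "name": "revenue_by_city",
        "description": "Total revenue per city, joined from store and sales datasets",
        "owner": "sales-analytics",
        "lineage": ["stores", "sales"],  # which inputs this product was built from
        "schema": {"city": "string", "revenue": "number"},
        "records": records,
    }


product = build_revenue_by_city(stores, sales)
print(json.dumps(product, indent=2))
```

Because the metadata travels with the records, a human or an AI agent receiving the product can tell what it contains, who is accountable for it and where it came from.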

3 Provide seamless access to data through a data product marketplace

Data assets must be easily discoverable and accessible across the business if they are to be used. Centralizing data and making it available through a secure, self-service data product marketplace is vital. It provides a single source of truth and acts as the consumption layer for AI-ready data, while ensuring lineage, governance and transparency.

4 Extend existing data governance

AI-ready data requires existing governance processes to be extended so that they set and enforce standards in areas such as metadata and the responsible, ethical use of data (particularly personal data) to train AI models and power the actions of AI agents.

5 Put the right technology stack in place

Organizations have invested heavily in their data infrastructure. However, in many cases this has created complex data management stacks, with multiple, often overlapping tools. Combining different data management solutions through the deployment of data management platforms provides clarity and savings, but must be integrated with best-of-breed data product marketplace solutions to deliver accessible, consumable data assets to AI and human users.

6 Work closely with the business

Collaboration between data and IT teams and the wider business is essential to AI ROI. Data product marketplaces facilitate this teamwork by providing a centralized space to share, govern, manage and consume data through an intuitive experience that meets the needs of all groups. Users can give feedback on and rate data, while data owners can answer queries and administrators can grant access to specific data products for relevant users and AI.
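The access and feedback loop described above can be sketched roughly as follows, assuming principals are plain strings prefixed with `user:` or `agent:` so that human users and AI agents are governed the same way. The `DataProduct` class, the `ADMINS` set and all names are hypothetical and do not reflect any vendor's actual permission model.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Optional

ADMINS = {"admin:carla"}  # hypothetical administrators allowed to grant access


@dataclass
class DataProduct:
    name: str
    owner: str
    readers: set[str] = field(default_factory=set)        # users ("user:...") or AI agents ("agent:...")
    ratings: dict[str, int] = field(default_factory=dict)  # principal -> stars, 1 to 5

    def grant(self, admin: str, principal: str) -> None:
        """Only administrators may grant read access to this product."""
        if admin not in ADMINS:
            raise PermissionError(f"{admin} is not an administrator")
        self.readers.add(principal)

    def can_read(self, principal: str) -> bool:
        return principal == self.owner or principal in self.readers

    def rate(self, principal: str, stars: int) -> None:
        if not self.can_read(principal):
            raise PermissionError(f"{principal} cannot rate data it cannot read")
        if not 1 <= stars <= 5:
            raise ValueError("stars must be between 1 and 5")
        self.ratings[principal] = stars  # re-rating replaces the earlier score

    def average_rating(self) -> Optional[float]:
        return mean(list(self.ratings.values())) if self.ratings else None


p = DataProduct("store_sales", owner="user:dana")
p.grant("admin:carla", "user:alice")
p.grant("admin:carla", "agent:forecast-bot")
p.rate("user:alice", 4)
p.rate("agent:forecast-bot", 5)
print(p.can_read("user:bob"), p.average_rating())  # False 4.5
```

Treating AI agents as ordinary principals keeps a single set of access rules for both audiences.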

9. Examples of customers that manage the data lifecycle with Huwise

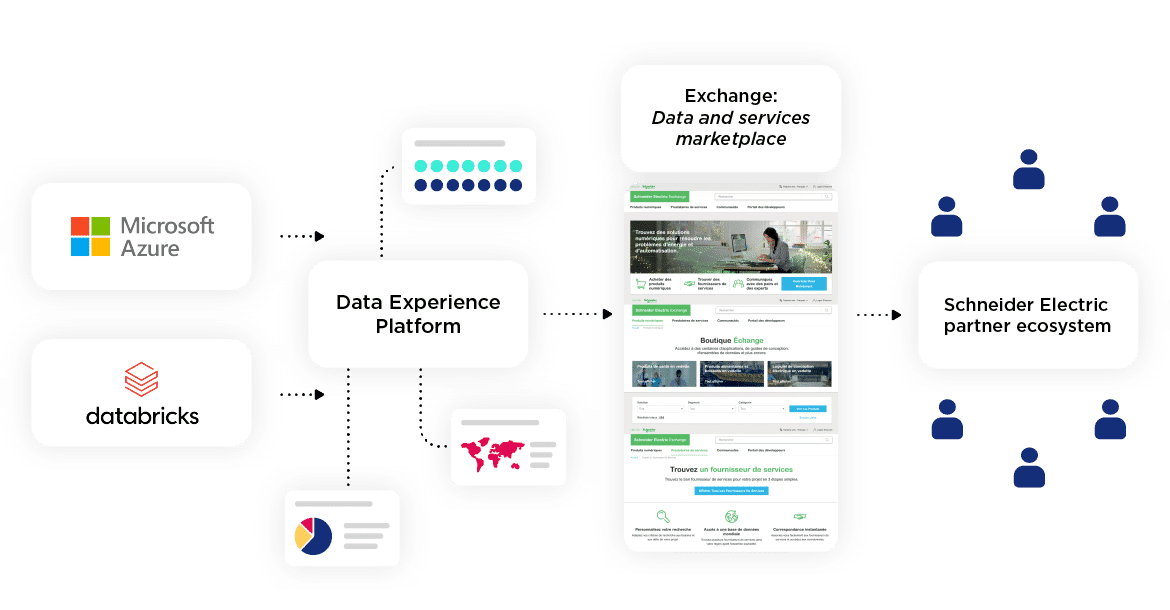

Schneider Electric – increasing internal and external data consumption

Schneider Electric uses a range of solutions to manage data across its lifecycle, ensuring it meets governance and sharing requirements. These include cloud storage on Microsoft Azure and a Databricks data lakehouse. These feed data into the Huwise data product marketplace, where Schneider Electric creates compelling data assets, products and visualizations that it shares through its Exchange data and services marketplace.

Ministry of Sport – data management to improve decision-making

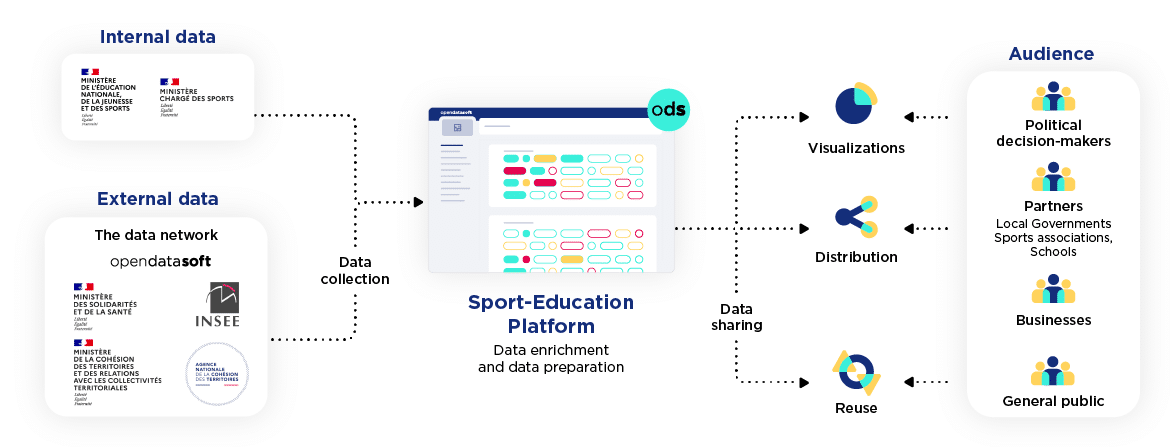

The French Ministry of Sport collects a range of internal and external data, from government agencies including the Ministry of Health and the French National Statistics Authority (INSEE). These are all centralized within the Huwise marketplace where they are prepared and enriched. Data is then shared through visualizations and reuses with political decision-makers, partners, businesses and the general public.

Lamie mutuelle: centralizing data to enable sharing

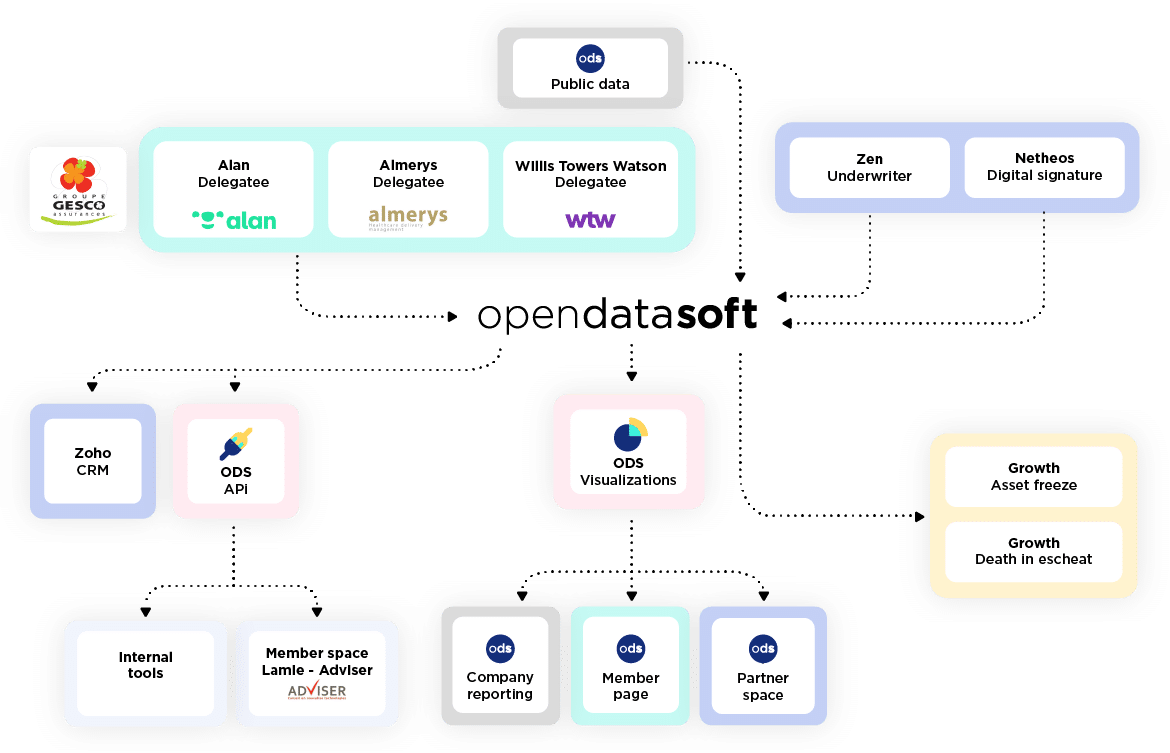

Insurance company Lamie has made Huwise the cornerstone of its data management strategy. All data, whether collected internally or externally by partner organizations, is centralized in its Huwise data product marketplace. It is then shared through data products such as dashboards for employees; with its Zoho CRM solution and other internal systems via APIs; and with customers through its dedicated customer and B2B marketplaces.

FAQs


Data management refers to the strategy, processes, and technology tools used to manage and protect an organization’s data. It covers the collection, processing, storing, sharing and use of data internally within the organization and externally across its ecosystem.

Data management began as a reactive discipline that looked to control the growing volumes of data created and collected by organizations. The aim was to reduce risk, lower costs, enable compliance with regulations such as the GDPR, and protect data securely.

However, organizations now recognize the proactive benefits that effective data management brings. Chief Data Officers (CDOs) and other leaders aim to harness data to deliver business value, such as by improving decision-making, boosting productivity, enabling innovation and underpinning AI. All of these data management benefits rely on scaling data consumption across the organization, providing relevant data to human employees and AI in a seamless, understandable way.


Data management refers to the entire discipline and practice of managing all data within an organization. By contrast, master data management (MDM) covers how master data is created, shared, updated and consumed. Master data is non-transactional data that provides context to transactional data by describing it, making it easier to understand and manage. For example, it covers entities such as product names, customer formats or how you describe your financial structures or offices. Master data does not refer to individual transactions but instead describes and helps categorize them. Companies use master data management tools to ensure their master data is consistent across the entire organization.
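A small sketch of the distinction: the transactions below only reference products by ID, while a single master record per product supplies the descriptive context (name, category) that reports need. All product IDs, names and categories are hypothetical.

```python
# Master data: one shared description of each product (hypothetical records).
products = {
    "P-001": {"name": "Smart Thermostat", "category": "Home automation"},
    "P-002": {"name": "Circuit Breaker 16A", "category": "Electrical distribution"},
}

# Transactional data: individual sales events that only reference products by ID.
transactions = [
    {"tx_id": 1, "product_id": "P-001", "qty": 3},
    {"tx_id": 2, "product_id": "P-002", "qty": 10},
    {"tx_id": 3, "product_id": "P-001", "qty": 1},
]


def units_by_category(transactions: list[dict], products: dict[str, dict]) -> dict[str, int]:
    """Categorize transactions using the master data, then total the units sold."""
    totals: dict[str, int] = {}
    for tx in transactions:
        category = products[tx["product_id"]]["category"]
        totals[category] = totals.get(category, 0) + tx["qty"]
    return totals


print(units_by_category(transactions, products))
# {'Home automation': 4, 'Electrical distribution': 10}
```

Because every report resolves categories through the same master records, correcting a category once in the master data changes it consistently everywhere, which is the consistency MDM tools aim to guarantee.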


A data product marketplace is a centralized, self-service, one stop shop for all of an organization’s data assets, including data products. It is designed to drive data consumption at scale. Through its intuitive, e-commerce style interface it enables every user (human and AI) to discover, access, and consume data with confidence, without requiring specialist data skills or support.

Data is made available in understandable, accessible ways, such as through highly consumable data products, visualizations (including dashboards, maps, graphics and data stories) and via APIs and common download formats. It features built-in granular access management capabilities to protect and secure information and meet governance and compliance needs. Data discovery is aided by AI-powered search, comprehensive metadata management features and an integral business glossary that ensures users can confidently access the data they need. Data lineage capabilities enable administrators to easily track where data is being used, driving a culture of continuous improvement around consumption.
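One way to picture what lineage tracking enables is impact analysis: given a record of which assets and consumers are derived from which sources, a breadth-first traversal finds everything downstream of a dataset. The `LINEAGE` map and asset names below are hypothetical; how a given platform actually stores lineage is not described here.

```python
from collections import deque

# Hypothetical lineage edges: source asset -> assets or consumers derived from it.
LINEAGE = {
    "raw.sales": ["product.revenue_by_city"],
    "raw.stores": ["product.revenue_by_city"],
    "product.revenue_by_city": ["dashboard.regional_kpis", "agent:forecast-bot"],
    "dashboard.regional_kpis": [],
}


def downstream(asset: str, lineage: dict[str, list[str]]) -> list[str]:
    """Breadth-first search returning every asset or consumer that depends on `asset`."""
    seen = {asset}
    queue = deque([asset])
    order = []
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:  # avoid revisiting shared descendants
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


print(downstream("raw.sales", LINEAGE))
# ['product.revenue_by_city', 'dashboard.regional_kpis', 'agent:forecast-bot']
```

An administrator could use this kind of query to see which dashboards and AI agents would be affected before changing or retiring a source dataset.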


Data product marketplaces and data catalogs may appear to do the same job, but they are different in multiple ways. Essentially, data catalogs provide a centralized inventory of data for technical experts, while data product marketplaces make data available at scale for consumption across the business and by AI. Key differences are:

- A data catalog identifies, organizes and describes the organization’s data assets through comprehensive metadata. It provides an index to an organization’s data – the data cannot be accessed directly through the catalog. In contrast, a data product marketplace enables users to access the data itself, as well as providing a full inventory, either through its own built-in catalog or via integration with an existing data catalog solution.

- Data catalogs are complex, technical tools, requiring specialist training and skills to understand and operate. Data product marketplaces are built on an intuitive, e-commerce style interface so that they can be used by anyone, without requiring training or support.
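The first difference can be sketched in code: a catalog answers "what exists?" with metadata only, while a marketplace offers the same inventory and search plus governed access to the records themselves. The `Catalog` and `Marketplace` classes and the grant structure are hypothetical simplifications for illustration.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    description: str
    columns: list[str]
    rows: list[dict]


class Catalog:
    """Inventory of assets: answers 'what exists?' with metadata only."""

    def __init__(self, assets: list[Asset]):
        self._assets = {a.name: a for a in assets}

    def search(self, term: str) -> list[dict]:
        term = term.lower()
        # Only descriptive metadata is returned; the rows themselves are not exposed.
        return [
            {"name": a.name, "description": a.description, "columns": a.columns}
            for a in self._assets.values()
            if term in a.name.lower() or term in a.description.lower()
        ]


class Marketplace(Catalog):
    """Same inventory and search, plus governed access to the data itself."""

    def __init__(self, assets: list[Asset], grants: dict[str, set[str]]):
        super().__init__(assets)
        self._grants = grants  # asset name -> principals allowed to read it

    def get_data(self, name: str, principal: str) -> list[dict]:
        if principal not in self._grants.get(name, set()):
            raise PermissionError(f"{principal} has no access to {name}")
        return list(self._assets[name].rows)  # a copy of the list, not the stored list


sales = Asset("sales", "Daily sales per store", ["store_id", "revenue"],
              [{"store_id": "S1", "revenue": 120.5}])
catalog = Catalog([sales])
market = Marketplace([sales], grants={"sales": {"user:alice", "agent:forecast-bot"}})

print(catalog.search("sales"))                 # metadata only
print(market.get_data("sales", "user:alice"))  # the records themselves
```

Subclassing reflects the point that a marketplace includes the catalog's inventory (or integrates with an existing catalog) rather than replacing it.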


Internal data product marketplaces make data assets easily available to the entire organization. This drives consumption by humans and AI, and turns data into real business value. Internal data product marketplaces centralize all data products and assets, eliminate internal data silos, simplify information and knowledge sharing and accelerate internal AI deployment by supplying models and agents with high-quality, machine-readable data.

Internal data product marketplaces have multiple uses across the organization:

- Providing a single source of truth around key metrics such as sales and customers

- Maximizing efficiency by centralizing all data and eliminating data duplication

- Boosting productivity and underpinning more data-driven decision-making

- Increasing collaboration between teams through data sharing

- Freeing up data team time and resources through data self-service

- Enabling innovation, including new business models and services


A data exchange enables the secure, controlled, self-service sharing of data assets, products and services between different organizations. Data exchanges are also referred to as B2B data product marketplaces, data spaces or partner data marketplaces.

By sharing data across organizational ecosystems, data exchange platforms deliver multiple benefits:

- They enable greater collaboration by breaking down silos between organizations

- They increase efficiency by automating data sharing, such as across supply chains

- They underpin better decision-making and analysis through access to more comprehensive, accurate and contextualized data

- They enable better data governance, including through monitoring and reporting

- They help automate regulatory compliance, increasing efficiency while helping organizations comply with legislation such as the GDPR and CCPA

- They enable organizations to gain a deeper view of their activities, and meet reporting needs in areas such as sustainability and emissions reduction

- They provide the framework to monetize data assets, making them available securely through self-service to existing and new customers


Successful AI relies on access to reliable, accurate, comprehensive, and contextualized data. Data marketplaces provide a centralized, trusted and machine-readable repository of data, especially data products, that can be consumed by AI in multiple ways, such as through APIs:

- Large Language Models (LLMs) can be trained on data from the marketplace, helping ensure their outputs are accurate, unbiased and broadly applicable across use cases

- AI agents (agentic AI) can access data within the marketplace to take better informed, real-time actions and decisions

- Generative AI can search data within the marketplace to provide users with relevant, accessible information in easily consumable forms, such as visualizations. Users simply ask a question through a chatbot-style interface to receive a reliable, accurate response

In all cases, the marketplace’s data lineage features provide a full audit trail of data consumption, while granular access management capabilities protect data security. Together, these support regulatory compliance and ethical AI.
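As a rough sketch of API-based consumption by an AI agent, the code below builds paginated query URLs for a records endpoint and iterates until a short page signals the end. The base URL, the path layout and the `limit`/`offset`/`where` parameter names are hypothetical assumptions, not the documented API of any specific marketplace; the HTTP call is injected as a `fetch` function so the paging logic can be shown without a network connection.

```python
from typing import Callable, Iterator, Optional
from urllib.parse import parse_qs, urlencode, urlparse

BASE_URL = "https://data.example.com/api/datasets"  # hypothetical endpoint


def records_url(dataset_id: str, limit: int, offset: int, where: Optional[str] = None) -> str:
    """Build one page request; parameter names are assumptions for this sketch."""
    params: dict[str, object] = {"limit": limit, "offset": offset}
    if where:
        params["where"] = where  # optional server-side filter
    return f"{BASE_URL}/{dataset_id}/records?{urlencode(params)}"


def iter_records(dataset_id: str, fetch: Callable[[str], list[dict]],
                 page_size: int = 100, where: Optional[str] = None) -> Iterator[dict]:
    """Yield every record, requesting pages until one comes back short."""
    offset = 0
    while True:
        page = fetch(records_url(dataset_id, page_size, offset, where))
        yield from page
        if len(page) < page_size:
            return
        offset += page_size


# Stand-in for a real HTTP client: serves slices of an in-memory list.
DATA = [{"id": i} for i in range(5)]


def fake_fetch(url: str) -> list[dict]:
    query = parse_qs(urlparse(url).query)
    limit, offset = int(query["limit"][0]), int(query["offset"][0])
    return DATA[offset:offset + limit]


rows = list(iter_records("store_sales", fake_fetch, page_size=2))
print(records_url("store_sales", 2, 0, "city = 'Lyon'"))
print(rows)
```

In a real deployment, `fetch` would also carry the agent's credentials, so the marketplace can enforce access rules and record each request in its audit trail.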

As well as supporting AI projects, data product marketplaces also incorporate AI to better serve users, including through AI-powered search and discovery, similar data recommendations, chatbot-based visualization creation and agentic AI capabilities.